AV 3.0: Torc’s AI Blueprint

Torc has cracked the code: we’ve designed an unparalleled system that unlocks safe and reliable autonomous driving for long-haul trucking and that can be scaled quickly to meet the needs of our fleet customers. We call it AV 3.0.

AV 3.0

We drew on Torc’s world-class AI talent and long history in robotics and self-driving vehicles to create AV 3.0: our virtual driver software, plus the advanced data loop and generative AI simulation infrastructure used to train and test it. Together, these set us apart from others in the industry.

Let’s break down the parts and explain the advanced components of each.

The Software Product: Virtual Driver

The virtual driver, our autonomous software product that drives the truck, consists of three main functional modules: perception, prediction, and planning. It is an end-to-end, reinforcement-learned (RL), modular software stack with redundant rule-based (heuristic) guardrails. This combination allows us to uniquely deliver the optimal mix of autonomous driving performance, system reliability, and driving safety. The machine learning modules in the stack provide the highest driving performance, while Torc layers in rule-based models and arbitration as redundant guardrails to ensure the ML models don’t violate the basic rules of driving.
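The post doesn’t describe how the guardrails and arbitration are implemented, so the following is only a minimal sketch of the general pattern, assuming a learned planner that proposes a trajectory and a set of hand-coded checks that can veto it. Every name, limit, and the fallback behavior here (`Trajectory`, `guardrail_violations`, `arbitrate`, `SPEED_LIMIT_MPS`, and so on) is hypothetical, not Torc’s code.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical limits, chosen only for illustration.
SPEED_LIMIT_MPS = 29.0      # roughly 65 mph
MAX_LANE_OFFSET_M = 1.0     # allowed drift from lane center
MIN_LEAD_GAP_M = 30.0       # below this gap, the plan must slow down


@dataclass
class Trajectory:
    speeds_mps: List[float]          # planned speed at each future step
    lateral_offsets_m: List[float]   # planned offset from lane center at each step
    source: str = "learned"


def guardrail_violations(plan: Trajectory, lead_gap_m: float) -> List[str]:
    """Hand-coded rules a proposed plan must satisfy; each check is easy to audit."""
    violations = []
    if any(v > SPEED_LIMIT_MPS for v in plan.speeds_mps):
        violations.append("speed_limit")
    if any(abs(d) > MAX_LANE_OFFSET_M for d in plan.lateral_offsets_m):
        violations.append("lane_keeping")
    # Too close to the lead vehicle and the plan does not slow down.
    if lead_gap_m < MIN_LEAD_GAP_M and plan.speeds_mps[-1] >= plan.speeds_mps[0]:
        violations.append("following_gap")
    return violations


def fallback_plan(plan: Trajectory, decel_per_step_mps: float = 1.0) -> Trajectory:
    """Conservative rule-based plan: hold lane center and ease off speed."""
    start = min(plan.speeds_mps[0], SPEED_LIMIT_MPS)
    n = len(plan.speeds_mps)
    speeds = [max(0.0, start - decel_per_step_mps * (i + 1)) for i in range(n)]
    return Trajectory(speeds, [0.0] * n, source="rule_based")


def arbitrate(proposal: Trajectory, lead_gap_m: float) -> Tuple[Trajectory, List[str]]:
    """Accept the learned proposal unless a guardrail fires; otherwise fall back."""
    violations = guardrail_violations(proposal, lead_gap_m)
    if not violations:
        return proposal, violations
    return fallback_plan(proposal), violations
```

Keeping each rule as a small, named check is what makes a guardrail layer auditable: when it vetoes the learned plan, it reports exactly which rule fired.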

The heuristic guardrails are one of the benefits of Torc’s 20-year heritage in developing autonomous vehicle software products. Building rule-based models is an intensive, manual, and time-consuming process, and Torc has been building heuristic rules into its code since the beginning. Because these rules are hand-coded, they are also inherently verifiable and interpretable, adding another layer of verifiability to support a strong safety case.

The stack’s modular architecture provides fine-grained introspection: the ability to see, validate, and explain whether each learned and heuristic module is reacting properly to its input. By comparison, an AV 2.0 end-to-end system is a “black box,” with sensor data in and a trajectory out. You can’t understand how the stack is reasoning, only that it is reasoning. There’s no way to inspect intermediate results to see whether a module is behaving properly and, therefore, no effective way to debug issues. Every time an issue is found, an AV 2.0 end-to-end system must be retrained as a whole on new data specific to that issue, and there is no way of knowing whether the retraining truly fixed it. This “whack-a-mole” approach adds significant time and uncertainty to software development and safety validation.

With our AV 3.0 stack, we can see how the system reasons within each module (perception, prediction, and planning) and pinpoint where problems arise. We can then retrain the stack with the right set of generated data to target the specific issue and supervise each module’s output to validate the fix.

Perceived object bounding boxes and lane centers from the perception module.
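To illustrate module-level introspection, here is a minimal sketch, assuming a toy pipeline in which perception, prediction, and planning are separate functions and each has a sanity check on its output. The modules, checks, and thresholds are hypothetical stand-ins; the point is only that recording every intermediate output lets a failure be traced to the module that produced it, which an opaque sensors-in, trajectory-out model cannot offer.

```python
import math
from typing import Any, Callable, Dict, List, Optional, Tuple

# (module name, module function, sanity check on that module's output)
Stage = Tuple[str, Callable[[Any], Any], Callable[[Any], bool]]


def perception(raw_returns: List[dict]) -> List[dict]:
    """Toy detector: turn raw sensor returns into object hypotheses."""
    return [{"id": r["id"], "range_m": r["range_m"], "rate_mps": r["rate_mps"]}
            for r in raw_returns]


def prediction(objects: List[dict], horizon_s: float = 2.0) -> List[dict]:
    """Constant-velocity forecast of each object's range."""
    return [{"id": o["id"], "future_range_m": o["range_m"] + o["rate_mps"] * horizon_s}
            for o in objects]


def planning(predictions: List[dict]) -> str:
    """Slow down if any object is forecast to be closer than 40 m."""
    closest = min((p["future_range_m"] for p in predictions), default=math.inf)
    return "slow_down" if closest < 40.0 else "keep_speed"


STAGES: List[Stage] = [
    ("perception", perception, lambda objs: all(o["range_m"] >= 0.0 for o in objs)),
    ("prediction", prediction, lambda preds: all(math.isfinite(p["future_range_m"]) for p in preds)),
    ("planning", planning, lambda action: action in {"slow_down", "keep_speed"}),
]


def run_with_introspection(stages: List[Stage], sensor_input: Any) -> Tuple[Dict[str, Any], Optional[str]]:
    """Run modules in order, keep every intermediate output, stop at the first failed check."""
    trace: Dict[str, Any] = {}
    x = sensor_input
    for name, module, check in stages:
        x = module(x)
        trace[name] = x
        if not check(x):
            return trace, name  # the faulty module is localized
    return trace, None
```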

The Simulation Environment: Learning to Create the World

Supporting the training of our virtual driver is our world-generation and data-driven simulation environment, which is made up of several important and complex pieces.

It Always Starts with the Data. We capture real-world data with the production-intent sensor suites on our trucks and have collected a broad range of highway and surface-street data across many regions and driving conditions.

In fact, Torc has by far the largest set of real-world driving data in autonomous trucking, spanning many diverse scenarios and corner cases.

We then recreate that real-world data in our data-driven simulation environment, which lets us replay those scenes ad infinitum to test the virtual driver. That same real-world data is also the starting point for the generative AI environment we’ve developed, which creates an effectively unlimited number of challenging driving scenes and scenarios to train and test the virtual driver against the needs of our operational design domain (ODD) and our safety goals.

Because no tool on the market could generate the realistic data we needed, the Torc AI team drew on years of research and development to build these capabilities, giving us a unique advantage in time, cost, and data fidelity.


These capabilities can be summarized as follows:

Neural Rendering

A generative AI approach to realistic scene creation. Starting from real-world scenes, we can recreate freight-specific, customer-requested sections of road with various “actors” (cars, trucks, animals, pedestrians) and then generate unique additions or changes to the scene, such as a car braking hard in front, a wrong-way driver, or a light snow shower that becomes a blizzard. The output is photo- and lidar-realistic and can be injected into our perception stack.

World Simulation

This approach generates the other objects in the scene that react to our truck (the ego vehicle) and then controls their behaviors however we need. We can make any actor’s reactions more or less aggressive, creating an effectively unlimited variety of scenes and behaviors. For example, a car captured behaving normally on one section of road can be made to behave aggressively by degrees: it can go from benign to aggressively merging into the truck’s lane at various points, with positions adjusted by millimeters and timings by milliseconds.

Importantly, all learned behaviors are grounded in real-world, physics-based models. We could test with Hollywood-style simulations of flying cars or people, but we stick to what can actually happen: for example, we simulate a vehicle skidding into our lane where, in the real-world data, it had been driving carefully in its own lane.
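As a rough illustration of dialing an actor’s behavior “by degrees” while staying physically plausible, the sketch below assumes a single recorded neighbor whose merge into our lane is parameterized by an `aggression` value between 0 and 1: higher values start the merge later and complete it faster. Variants whose peak lateral acceleration exceeds an assumed tire-friction limit are discarded. The lane width, timing ranges, acceleration limit, and all names are hypothetical; Torc’s world simulation uses learned behaviors rather than a hand-written profile like this one.

```python
import math
from dataclasses import dataclass

LANE_WIDTH_M = 3.7          # assumed lateral distance the actor moves to enter our lane
MAX_LAT_ACCEL_MPS2 = 7.0    # hypothetical friction-based plausibility limit


@dataclass(frozen=True)
class CutIn:
    start_s: float      # time at which the actor begins its merge
    duration_s: float   # time the lateral move takes

    def lateral_position(self, t: float) -> float:
        """Lateral displacement toward our lane, using a smooth half-cosine profile."""
        if t <= self.start_s:
            return 0.0
        if t >= self.start_s + self.duration_s:
            return LANE_WIDTH_M
        phase = (t - self.start_s) / self.duration_s
        return LANE_WIDTH_M / 2 * (1 - math.cos(math.pi * phase))

    def peak_lateral_accel(self) -> float:
        # y(t) = W/2 * (1 - cos(pi t / T)) peaks at |y''| = W * pi^2 / (2 T^2)
        return LANE_WIDTH_M * math.pi ** 2 / (2 * self.duration_s ** 2)


def perturb(aggression: float, calm_start_s: float = 6.0, late_start_s: float = 2.0,
            calm_duration_s: float = 5.0, sharp_duration_s: float = 1.0) -> CutIn:
    """Interpolate from a gentle merge (0.0) to an abrupt, late cut-in (1.0)."""
    a = min(max(aggression, 0.0), 1.0)
    start = calm_start_s + a * (late_start_s - calm_start_s)
    duration = calm_duration_s + a * (sharp_duration_s - calm_duration_s)
    return CutIn(round(start, 3), round(duration, 3))  # millisecond timing resolution


def physically_plausible(c: CutIn) -> bool:
    return c.peak_lateral_accel() <= MAX_LAT_ACCEL_MPS2


variants = [perturb(i / 10) for i in range(11)]
plausible = [c for c in variants if physically_plausible(c)]
```

With these assumed numbers, aggression levels 0.0 through 0.8 pass the plausibility check, while 0.9 and 1.0 would need more lateral acceleration than the assumed friction limit allows and are rejected.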

Image panels: scenario generation, neural rendering (cropped), and real-world footage.

“For fully data-driven simulation, you need to be able to simulate the behaviors and do so very realistically. You also need to be able to control the reactions, in a data-driven way,” says Felix Heide, Head of AI at Torc, and one of the architects of Scenario Dreamer™, a recent diffusion method for scenario generation. “This combination of world simulation systems accomplishes what is necessary.”

The Proof: Training and Testing

Using these layers of world simulation, with neural scene rendering combined with generated sequences of fully reactive agents, we repeatedly fine-tune and deeply test the virtual driver using reinforcement learning. Much like an instructor teaching a student behind the wheel, this approach lets the virtual driver explore decision making while receiving feedback at the same time.
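The post does not say how feedback is scored during reinforcement learning. A common approach is a shaped per-step reward, and the minimal sketch below assumes one with progress, collision, lane-keeping, comfort, and following-gap terms. The terms, weights, and names are hypothetical and chosen only to show how “feedback” can be turned into a number a learner can optimize.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepOutcome:
    progress_m: float      # distance advanced along the route this step
    collided: bool
    lane_offset_m: float   # distance from lane center
    jerk_mps3: float       # longitudinal jerk, a comfort proxy
    min_gap_s: float       # time gap to the nearest lead vehicle


# Hypothetical weights; a real system would tune these carefully.
W_PROGRESS = 0.1
COLLISION_PENALTY = 100.0
W_LANE = 1.0
W_JERK = 0.05
MIN_TIME_GAP_S = 2.0
W_GAP = 2.0


def step_reward(o: StepOutcome) -> float:
    """Reward progress; penalize collisions, drifting, harsh driving, and tailgating."""
    if o.collided:
        return -COLLISION_PENALTY
    r = W_PROGRESS * o.progress_m
    r -= W_LANE * o.lane_offset_m ** 2
    r -= W_JERK * abs(o.jerk_mps3)
    r -= W_GAP * max(0.0, MIN_TIME_GAP_S - o.min_gap_s)
    return r


def discounted_return(rewards: List[float], gamma: float = 0.99) -> float:
    """Sum of rewards with later steps discounted by gamma per step."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```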

The virtual driver is taught not only by being given the ideal road trajectory but also by being given additional scene information, so the system learns to explicitly predict that information and then explicitly use it in its final trajectory decision. This, in turn, makes training more accurate and, as a byproduct, helps us understand in more detail how the entire system behaves. That understanding can then be fed back into the system.
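One common way to teach a model both the target trajectory and additional scene information is an auxiliary (multi-task) loss. The sketch below assumes that formulation, with hypothetical names and a hypothetical `aux_weight`; a real system would compute it inside a deep learning framework so gradients flow through both terms, and the step where the trajectory head consumes the scene predictions is not shown.

```python
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def mean_sq_dist(pred: Sequence[Point], target: Sequence[Point]) -> float:
    """Mean squared Euclidean distance between matched 2D points."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and the same length")
    return sum((px - tx) ** 2 + (py - ty) ** 2
               for (px, py), (tx, ty) in zip(pred, target)) / len(pred)


def training_loss(pred_traj: Sequence[Point], expert_traj: Sequence[Point],
                  pred_agents: Sequence[Point], true_agents: Sequence[Point],
                  aux_weight: float = 0.5) -> Tuple[float, Dict[str, float]]:
    """Trajectory imitation loss plus a weighted auxiliary loss on predicted scene state."""
    traj_loss = mean_sq_dist(pred_traj, expert_traj)
    scene_loss = mean_sq_dist(pred_agents, true_agents)
    total = traj_loss + aux_weight * scene_loss
    # Per-term values are returned so each can be monitored separately.
    return total, {"trajectory": traj_loss, "scene": scene_loss}
```

Returning the per-term losses alongside the total means a regression in scene prediction shows up as its own number instead of being hidden inside a single trajectory error.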

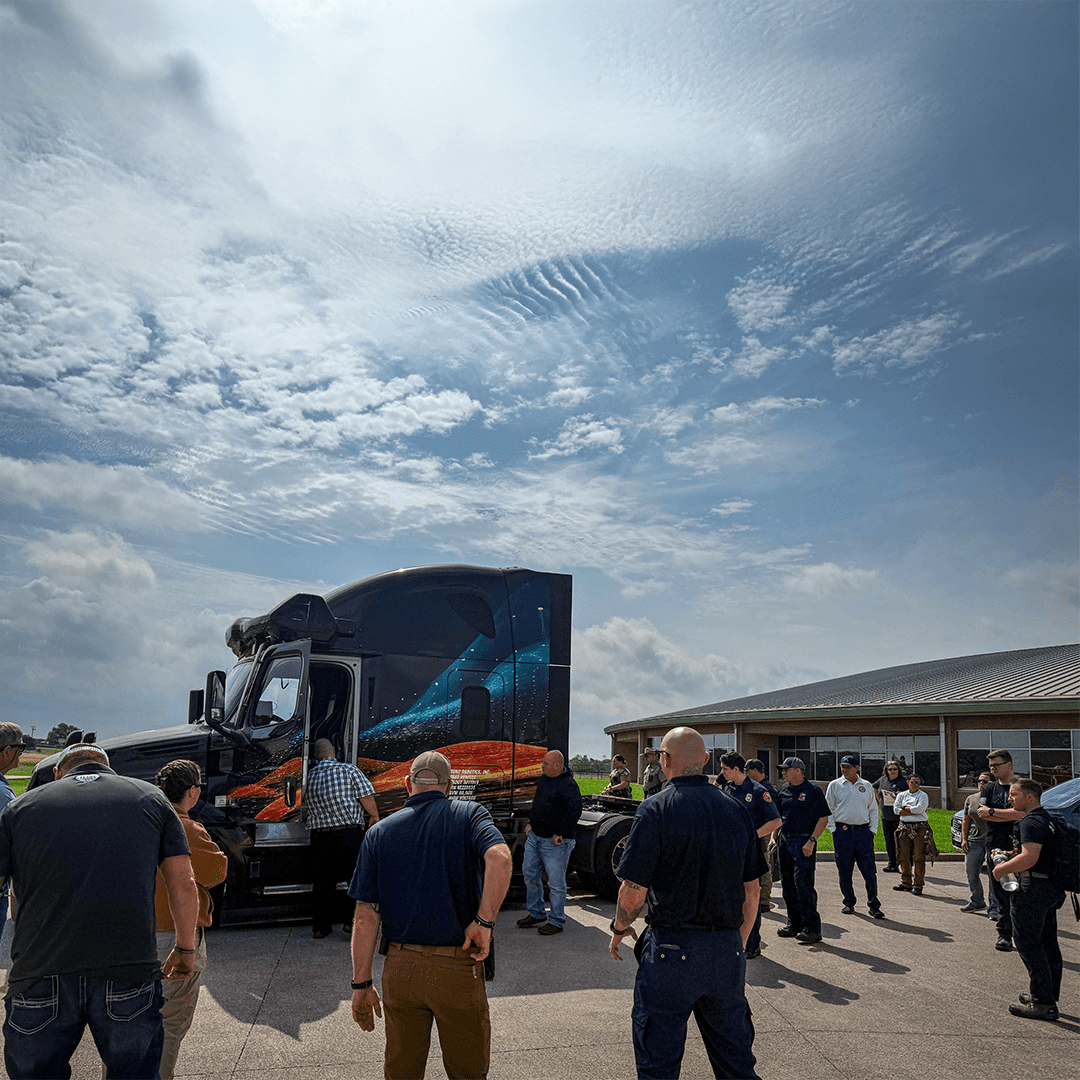

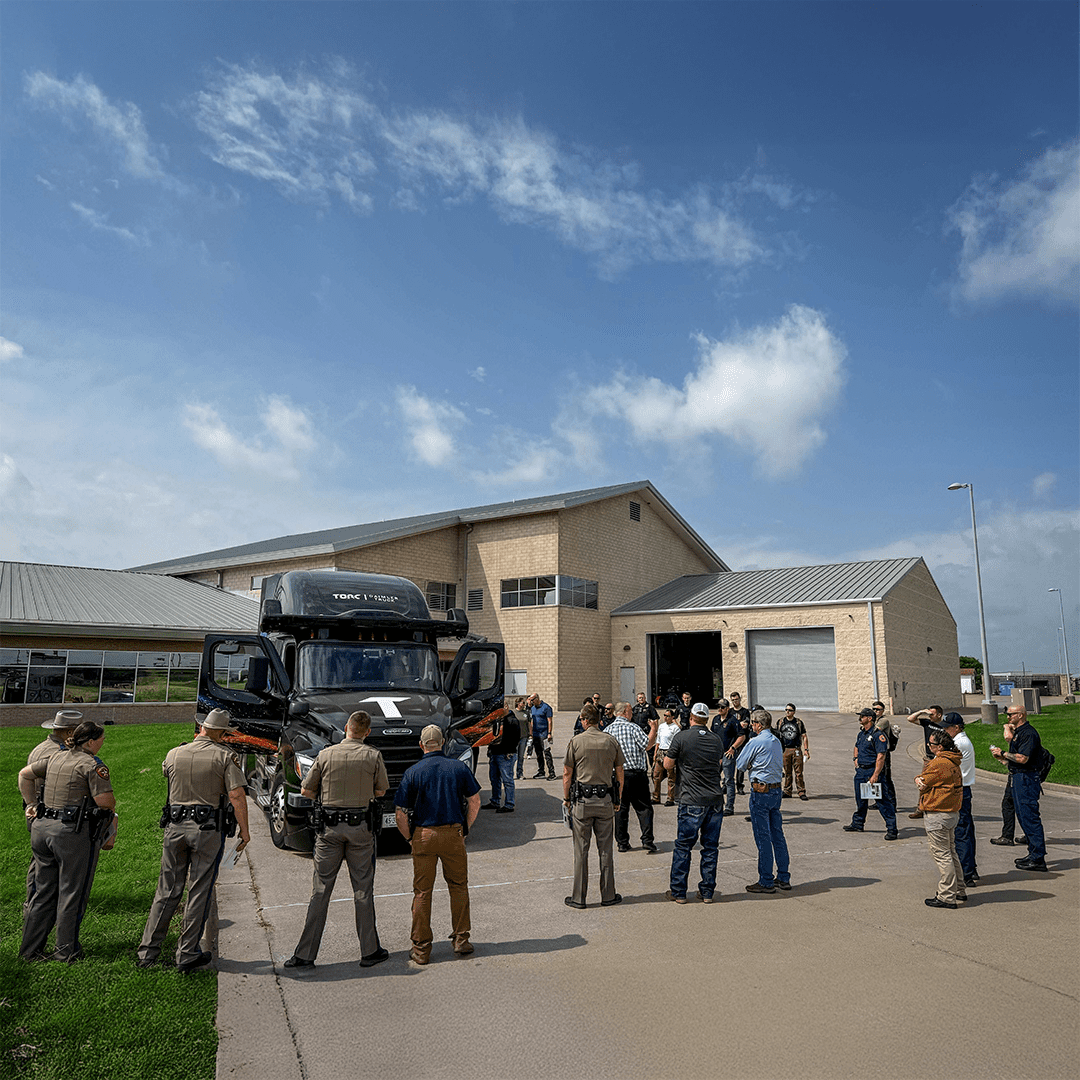

We apply all of these techniques to create the data needed to deeply test the virtual driver’s behavior in the most unusual or unlikely corner cases, giving us high confidence that it will behave safely on the road, i.e., that the training of our AI system and the virtual driver has succeeded. Once that confidence threshold is reached, we move the updated model out of the simulated environment and onto the trucks, validating it on both closed courses and public roads for every software release.
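The post doesn’t define how the confidence threshold is measured. One simple, hypothetical way to gate promotion from simulation to on-truck testing is to require that, for every scenario category, a conservative lower bound on the pass rate clears a threshold. The sketch below uses the Wilson score interval for that bound; the category names, counts, and the 0.99 threshold are assumptions for illustration, and a real safety case involves far more than a pass rate.

```python
import math
from typing import Dict, Tuple


def wilson_lower_bound(passes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the two-sided Wilson score interval (about 95% for z = 1.96)."""
    if trials == 0:
        return 0.0
    p = passes / trials
    denom = 1 + z * z / trials
    centre = p + z * z / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (centre - margin) / denom


def ready_for_vehicle_testing(results: Dict[str, Tuple[int, int]],
                              threshold: float = 0.99) -> bool:
    """Every scenario category must clear the bound; one weak category blocks promotion."""
    return all(wilson_lower_bound(passes, trials) >= threshold
               for passes, trials in results.values())
```

Using a lower bound rather than the raw pass rate penalizes categories with few trials, so a handful of lucky passes on a rare scenario type cannot by itself unlock a release.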

It’s this high-velocity AV 3.0 framework of data → training → testing → release that sets an industry benchmark in software development and deployment.

The End Result: Safe and Scalable

“End-to-end systems are critical for scalability. They allow us to be much more adaptable to new needs or to fix issues quickly, and because we have a modular transparent stack, we can see the issues independently,” says C.J. King, Torc Chief Technology Officer. “We can use our generative AI stack to generate the data to train the outcomes rather than train a camera-based object and distance detector, or lidar and radar object detection separately from the prediction and planning. It’s another layer of getting the technology ready for the road.”

This high velocity is critical not only for keeping to our release schedule but also for ensuring we can quickly enable our customers to scale to new routes and hubs. We can identify and triage issues and route them to engineering before we ever encounter them on the road. Issues are resolved within days, not weeks, and this turnaround means there can be multiple fixes and releases daily.

Why We Call It AV 3.0

Between now and our product launch in 2027, we will continue to hone this technology, gather more real-world data (something we will always need), develop commercialization plans and hub designs, close our robust safety case, and solve a multitude of other autonomous trucking puzzles. But AV 3.0 provides a clear path for how it can be done.

So, what’s next?

We’ve defined AV 3.0, the groundbreaking technology used to create, train, and test the virtual driver. What our virtual driver runs on, and how, is another industry-defining element that sets Torc apart from the competition. We’re deploying this technology right now, not on prototypes or demonstration trucks but on the trucks we will take to market, running on production-intent embedded hardware.

Transparency

AV 3.0 shows us the state of the entire model and why it acted the way it did, both within individual modules and in how each module affected the end-to-end system. We have visibility into, and understanding of, what is going on inside the model at all times.

Explainability

AV 3.0 provides traceability and verifiability, letting us see why and where our virtual driver did something. We can understand both what was detected and how the system reasoned to identify an object and its meaning. This is crucial for the explainability of our end-to-end system and for our safety case.

Improved Accuracy

Introspection means the AI model is aware of its internal state, how it makes decisions, and its behaviors. Models can understand why they are doing something and can therefore be more accurate.

Let’s look at Explainability and Improved Accuracy together: AV 3.0 provides object detection outputs and information about the 3D world our vehicle is in. Is that a car? How fast are other highway users traveling? Is that an emergency vehicle? Where is the lane center? Is there a traffic light? This level of detail lets us understand what the virtual driver thinks in a given situation and how it acts on that information.
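To make the idea concrete, the sketch below assumes the perception output is available as a structured world view (object labels, lane membership, distances, speeds, lane-center offset, traffic light state) and pairs a toy decision with the perceived facts it relied on. The data structure, labels, the 60 m following threshold, and the rules themselves are hypothetical; the virtual driver’s actual planner is learned, not this rule set.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class DetectedObject:
    label: str          # e.g. "car", "truck", "emergency_vehicle" (illustrative labels)
    in_ego_lane: bool
    distance_m: float   # longitudinal distance ahead of the truck
    speed_mps: float


@dataclass
class WorldView:
    ego_speed_mps: float
    lane_center_offset_m: float            # truck's offset from the detected lane center
    objects: List[DetectedObject] = field(default_factory=list)
    traffic_light: Optional[str] = None    # e.g. "red", "green", or None if none detected


def decide_and_explain(view: WorldView) -> Tuple[str, List[str]]:
    """Toy rule set that returns an action plus the perceived facts it relied on."""
    reasons: List[str] = []
    for obj in view.objects:
        if obj.label == "emergency_vehicle":
            reasons.append(f"emergency_vehicle at {obj.distance_m:.0f} m")
            return "slow_and_yield", reasons
    if view.traffic_light == "red":
        reasons.append("red traffic light detected")
        return "stop", reasons
    # Nearest slower vehicle in our lane within the (assumed) following range.
    for obj in sorted(view.objects, key=lambda o: o.distance_m):
        if obj.in_ego_lane and obj.distance_m < 60.0 and obj.speed_mps < view.ego_speed_mps:
            reasons.append(f"slower {obj.label} in lane at {obj.distance_m:.0f} m "
                           f"({obj.speed_mps:.1f} m/s)")
            return "follow", reasons
    reasons.append(f"lane clear; offset from lane center {view.lane_center_offset_m:+.2f} m")
    return "cruise", reasons
```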

Improved Safety

With full transparency into the entire model and its individual modules, it is easier to monitor model behavior, verify the software from the inside, and fully explain its perception and decision making.